Sample Data for the Snowflake App

MEHRWERK provides sample data that you can use with the Snowflake App. This tutorial walks you through installing the sample data and making it available to the mpmX Process Mining App.

Please note that integrating the mined data from Snowflake into your preferred BI platform is outside the scope of this section.

Prerequisites

To follow this guide, you need the following:

- You have a Snowflake account in which you have all the necessary permissions:
  - CREATE DATABASE, CREATE APPLICATION and IMPORT SHARE to install the sample data sets and the application
  - the ACCOUNTADMIN role to configure the application. See also Installation and Update.
- The sample data sets and the mpmX Process Mining App were shared with your account.
  - For this, we need your Snowflake account's organization name, account name and region ID.
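If you are unsure which values to send, a query like the following returns them for the account you are currently logged in to. This is a sketch using Snowflake's built-in context functions; we assume that the region name returned by CURRENT_REGION() is the "region ID" meant above.

```sql
-- Identifiers of the account this worksheet is connected to
SELECT
    CURRENT_ORGANIZATION_NAME() AS organization_name,
    CURRENT_ACCOUNT_NAME()      AS account_name,
    CURRENT_REGION()            AS region_id;  -- Snowflake's region name (assumed to be the region ID)
```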

Sample data

Get the shared sample data

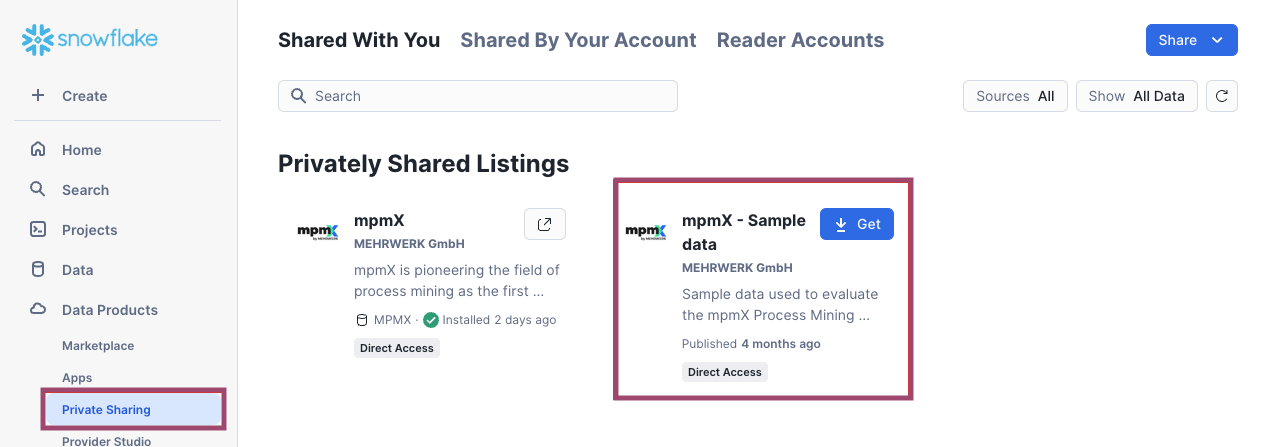

Log in to your Snowflake account. Make sure that your current role has the CREATE DATABASE and IMPORT SHARE privileges. Navigate to Data Products » Private Sharing. Find the mpmX - Sample data listing and click the Get button.
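To check the privileges before clicking Get, you can run the following in a worksheet. `<your_role>` is a placeholder for the role returned by the first query. Note that SHOW GRANTS TO ROLE lists only privileges granted directly to that role, not privileges it inherits from other roles.

```sql
-- Which role is the worksheet using right now?
SELECT CURRENT_ROLE();

-- Privileges granted directly to that role; look for
-- CREATE DATABASE and IMPORT SHARE granted on ACCOUNT
SHOW GRANTS TO ROLE <your_role>;
```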

The data might first need to be replicated to your region. In this case, Snowflake displays a corresponding message when you click the Get button and notifies you by email once the replication has finished. This can take about 10 minutes.

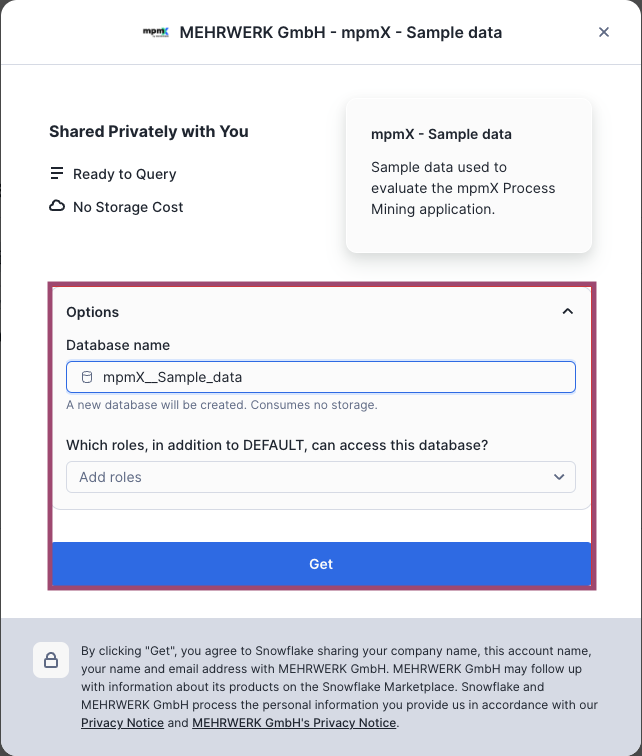

On the following screen, you can change the database name and the roles that can access the database. In this tutorial, we keep the default values. Click the Get button.
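Once the listing has been added, you can confirm that the shared database is visible to your role. This sketch assumes you kept the default database name MPMX__SAMPLE_DATA (the name the copy statements in the next step also read from); adjust it if you changed the name.

```sql
-- Is the shared database mounted in this account? (LIKE is case-insensitive)
SHOW DATABASES LIKE 'MPMX__SAMPLE_DATA';

-- Which tables does the listing provide?
SHOW TABLES IN SCHEMA MPMX__SAMPLE_DATA.EVENT_LOGS;
```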

Copy the sample data into a database in your account

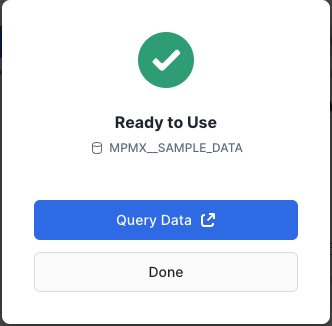

To access the data from within the mpmX Process Mining App, you need to copy the data sets to a database in your Snowflake account, because the app cannot select the shared data directly. You can do this by running the following statements in a Snowsight worksheet; clicking the Query Data button on the screen above takes you there.

Make sure that the role you will later use to install the app (see below) has the necessary permissions on that database. Otherwise, you will not be able to select the created tables from within the app. We discourage using the ACCOUNTADMIN role here and recommend creating a dedicated role for the app with the appropriate permissions.
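A dedicated role could be set up roughly as follows. This is a minimal sketch, not MEHRWERK's prescribed setup: the role name MPMX_APP_INSTALLER and the placeholders `<your_user>` and `<your_warehouse>` are hypothetical, and the grants cover only the account-level privileges from the prerequisites plus what the copy statements need to run. The new role would then take the place of `<role_to_install_app>`.

```sql
-- Run as a role that may create roles and grant account-level privileges
USE ROLE ACCOUNTADMIN;

-- Hypothetical name for the dedicated role
CREATE ROLE IF NOT EXISTS MPMX_APP_INSTALLER;

-- Account-level privileges listed in the prerequisites
GRANT CREATE DATABASE ON ACCOUNT TO ROLE MPMX_APP_INSTALLER;
GRANT CREATE APPLICATION ON ACCOUNT TO ROLE MPMX_APP_INSTALLER;
GRANT IMPORT SHARE ON ACCOUNT TO ROLE MPMX_APP_INSTALLER;

-- Read access to the shared database; only needed if this role was not
-- selected on the Get screen (assumes ACCOUNTADMIN owns the shared database)
GRANT IMPORTED PRIVILEGES ON DATABASE MPMX__SAMPLE_DATA TO ROLE MPMX_APP_INSTALLER;

-- Let your user switch to the role and run queries with it
GRANT ROLE MPMX_APP_INSTALLER TO USER <your_user>;
GRANT USAGE ON WAREHOUSE <your_warehouse> TO ROLE MPMX_APP_INSTALLER;
```

Because this role then creates TESTDATA itself, it owns the database and the copied tables.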

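In this tutorial, we create a database TESTDATA with a schema EVENT_LOGS and copy the sample data tables into that schema. The statements assume the shared database kept its default name MPMX__SAMPLE_DATA; if you changed it in the previous step, adjust the FROM clauses accordingly.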

```sql
-- Use the role that will later install the app (ideally a dedicated role, not ACCOUNTADMIN)
USE ROLE <role_to_install_app>;

CREATE DATABASE TESTDATA;
USE DATABASE TESTDATA;
CREATE SCHEMA EVENT_LOGS;
USE SCHEMA EVENT_LOGS;

-- Logistics data set: event log and case dimensions
CREATE TABLE TESTDATA.EVENT_LOGS.LOGISTICS_EVENT_LOG AS
    SELECT * FROM MPMX__SAMPLE_DATA.EVENT_LOGS.LOGISTICS_EVENT_LOG;

CREATE TABLE TESTDATA.EVENT_LOGS.LOGISTICS_CASE_DIMENSIONS AS
    SELECT * FROM MPMX__SAMPLE_DATA.EVENT_LOGS.LOGISTICS_CASE_DIMENSIONS;

-- P2P data set: case dimensions and event log
CREATE TABLE TESTDATA.EVENT_LOGS.P2P_CASE_DIMENSIONS AS
    SELECT * FROM MPMX__SAMPLE_DATA.EVENT_LOGS.P2P_CASE_DIMENSIONS;

CREATE TABLE TESTDATA.EVENT_LOGS.P2P_EVENT_LOG AS
    SELECT * FROM MPMX__SAMPLE_DATA.EVENT_LOGS.P2P_EVENT_LOG;

-- Ticket Log data set: case dimensions, event dimensions and event log
CREATE TABLE TESTDATA.EVENT_LOGS.TICKET_LOG_CASE_DIMENSIONS AS
    SELECT * FROM MPMX__SAMPLE_DATA.EVENT_LOGS.TICKET_LOG_CASE_DIMENSIONS;

CREATE TABLE TESTDATA.EVENT_LOGS.TICKET_LOG_EVENT_DIMENSIONS AS
    SELECT * FROM MPMX__SAMPLE_DATA.EVENT_LOGS.TICKET_LOG_EVENT_DIMENSIONS;

CREATE TABLE TESTDATA.EVENT_LOGS.TICKET_LOG_EVENT_LOG AS
    SELECT * FROM MPMX__SAMPLE_DATA.EVENT_LOGS.TICKET_LOG_EVENT_LOG;
```
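To check that the copy is complete, you can compare the row counts of the shared event logs with those of the new tables. This sketch assumes the default shared database name MPMX__SAMPLE_DATA and covers only the three event log tables; the same pattern applies to the dimension tables.

```sql
-- Matching source_rows and copied_rows indicate the CREATE TABLE ... AS SELECT copied everything
SELECT 'LOGISTICS_EVENT_LOG' AS table_name,
       (SELECT COUNT(*) FROM MPMX__SAMPLE_DATA.EVENT_LOGS.LOGISTICS_EVENT_LOG) AS source_rows,
       (SELECT COUNT(*) FROM TESTDATA.EVENT_LOGS.LOGISTICS_EVENT_LOG)          AS copied_rows
UNION ALL
SELECT 'P2P_EVENT_LOG',
       (SELECT COUNT(*) FROM MPMX__SAMPLE_DATA.EVENT_LOGS.P2P_EVENT_LOG),
       (SELECT COUNT(*) FROM TESTDATA.EVENT_LOGS.P2P_EVENT_LOG)
UNION ALL
SELECT 'TICKET_LOG_EVENT_LOG',
       (SELECT COUNT(*) FROM MPMX__SAMPLE_DATA.EVENT_LOGS.TICKET_LOG_EVENT_LOG),
       (SELECT COUNT(*) FROM TESTDATA.EVENT_LOGS.TICKET_LOG_EVENT_LOG);
```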

Description of the provided data sets

The shared sample data contains three data sets (P2P, Ticket Log and Logistics). Each consists of an event log and case dimensions; the Ticket Log data set also includes event dimensions.
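For an overview of the copied tables, you can query the information schema of your database. This sketch assumes the TESTDATA.EVENT_LOGS schema from the copy step; the table name prefixes (LOGISTICS_, P2P_, TICKET_LOG_) indicate which data set a table belongs to.

```sql
-- One row per copied table with its row count and storage size in bytes
SELECT TABLE_NAME, ROW_COUNT, BYTES
FROM TESTDATA.INFORMATION_SCHEMA.TABLES
WHERE TABLE_SCHEMA = 'EVENT_LOGS'
ORDER BY TABLE_NAME;
```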

P2P sample data

This data set contains sample data for object-centric process mining of a typical P2P process. It has the following characteristics:

- Variants: 5,276
- Objects: 4
  - Order
  - Order Item
  - Goods
  - Invoice
- Cases: 422,413
- Activities: 42
- Events: 783,723

The data provided includes an event log and case dimensions.
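A quick way to see which columns and values the P2P tables contain is to preview a few rows. This assumes the copies in TESTDATA.EVENT_LOGS; without an ORDER BY, LIMIT returns an arbitrary sample rather than the first rows in any particular order.

```sql
-- Sample rows from the P2P event log and its case dimensions
SELECT * FROM TESTDATA.EVENT_LOGS.P2P_EVENT_LOG LIMIT 10;
SELECT * FROM TESTDATA.EVENT_LOGS.P2P_CASE_DIMENSIONS LIMIT 10;
```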

Ticket Log sample data

This data set contains sample data for object-centric process mining of a support ticket process. It has the following characteristics:

- Variants: 185
- Objects: 2
  - Customer
  - Support
- Cases: 9,156
- Activities: 10
- Events: 21,229

The data provided includes an event log, case dimensions and event dimensions.
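Since the Ticket Log is the only data set that also ships event dimensions, it can help to compare the column definitions of its three tables to see how they fit together. DESCRIBE TABLE lists each column's name and data type; this sketch assumes the copies in TESTDATA.EVENT_LOGS.

```sql
-- Column names and types of the three Ticket Log tables
DESCRIBE TABLE TESTDATA.EVENT_LOGS.TICKET_LOG_EVENT_LOG;
DESCRIBE TABLE TESTDATA.EVENT_LOGS.TICKET_LOG_CASE_DIMENSIONS;
DESCRIBE TABLE TESTDATA.EVENT_LOGS.TICKET_LOG_EVENT_DIMENSIONS;
```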

Logistics sample data

This data set contains sample data for object-centric process mining of a logistics process. The data was taken from here and transformed into the shape our process mining app expects. It has the following characteristics:

- Variants: 35
- Objects: 7
  - Customer Order
  - Transport Document
  - Vehicle
  - Container
  - Handling Unit
  - Truck
  - Forklift
- Cases: 13,913
- Activities: 14
- Events: 35,372

The data provided includes an event log and case dimensions.